When testing a captive portal with a virtual station on a LANforge machine, you face the problem of multiple gateways. When the station associates, it gets a DHCP address along with a new gateway and DNS server. Your LANforge machine already has a default gateway and DNS server, assigned at boot through its management interface. You need to use both. On top of that, you are connected via screen sharing to a laptop, not to the LANforge machine directly.

When You Have a Serial Console

If you’re lucky, you have a working serial console connection between the screen-share laptop and the LANforge system. I stress working because the quality of USB serial adapters is spotty. When it works, it gives you a way to write new values into /etc/resolv.conf and change the default gateway without losing access to the machine.

You can get the station information from the command line with the lf_portmod.pl script:

/home/lanforge/scripts $ ./lf_portmod.pl --manager localhost \
--card 1 --quiet yes \
--port_name wlan0 --show_port
>>RSLT: 0 Cmd: 'nc_show_port' '1' '1' 'wlan0'
Shelf: 1, Card: 1, Port: 3 Type: STA Alias:
...
IP: 10.45.1.2 MASK: 255.255.255.0 GW: 10.0.0.1 VID: 0 ResetState: COMPLETE
DNS Servers: 8.8.8.8
IPv6-Global: DELETED
IPv6-Link: fe80::a00:27ff:fe0a:973d/64
IPv6-Gateway: DELETED
MAC: 08:00:27:0a:97:3d DEV: wlan0 MTU: 1500 TX Queue Len: 1000
...
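If you want to script the later steps, you can pull the station's IP address, gateway, and DNS server out of that output. The sketch below assumes the field layout shown above ("IP:", "GW:", "DNS Servers:"). The function names portmod_field, station_ip, station_gw and station_dns are hypothetical. Check the parsing against your LANforge version's output before relying on it.

```shell
# Hypothetical helper: print the token that follows a label (e.g. "IP:")
# in `lf_portmod.pl --show_port` output read from stdin.
# Assumes the "IP: ... MASK: ... GW: ..." layout shown above.
portmod_field() {
  local label="$1"
  awk -v label="$label" '
    {
      for (i = 1; i < NF; i++) {
        if ($i == label) { print $(i + 1); exit }
      }
    }'
}

# Hypothetical convenience wrappers; each reads the port report on stdin.
station_ip()  { portmod_field "IP:"; }
station_gw()  { portmod_field "GW:"; }
# "DNS Servers: 8.8.8.8" -> third field; prints only the first server.
station_dns() { awk '/^DNS Servers:/ { print $3; exit }'; }
```

For example, save the report once and read each value from it:

$ ./lf_portmod.pl --manager localhost --card 1 --quiet yes --port_name wlan0 --show_port > wlan0.txt
$ STA_IP=$(station_ip < wlan0.txt); STA_GW=$(station_gw < wlan0.txt)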

Note down your existing route information:

$ route -n > existing_route.txt

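Add a host route to the laptop over the management port so you don’t lose it (set LAPTOP_IP to the laptop’s address first). Then replace the default route with the station’s gateway:

$ sudo route add -host $LAPTOP_IP dev eth0
$ sudo route del default
$ sudo route add default gw 10.0.0.1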
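Over a flaky serial line it helps to generate these commands first and read them before running anything. The sketch below uses a hypothetical plan_route_swap function and assumes the net-tools route command. It only prints the commands; it replaces the default route by deleting it and adding the station gateway. Nothing changes until you pipe its output to a root shell.

```shell
# Hypothetical helper: print (do not run) the route changes for the
# serial-console workflow.
#   $1 = laptop IP to keep reachable over the management port
#   $2 = station gateway to use as the new default route
#   $3 = management interface (defaults to eth0)
plan_route_swap() {
  local laptop_ip="$1" station_gw="$2" mgmt_if="${3:-eth0}"
  if [ -z "$laptop_ip" ] || [ -z "$station_gw" ]; then
    echo "usage: plan_route_swap LAPTOP_IP STATION_GW [MGMT_IF]" >&2
    return 1
  fi
  echo "route -n > existing_route.txt"            # keep a copy of the old table
  echo "route add -host $laptop_ip dev $mgmt_if"  # keep the laptop reachable
  echo "route del default"                        # drop the management default
  echo "route add default gw $station_gw"         # default via the station
}
```

For example, with 192.0.2.10 standing in for the laptop's address:

$ plan_route_swap 192.0.2.10 10.0.0.1
$ plan_route_swap 192.0.2.10 10.0.0.1 | sudo sh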

Next, re-order the entries in your /etc/resolv.conf file so the station’s DNS server comes first and the management nameserver is commented out:

nameserver 8.8.8.8
# nameserver 176.168.1.1
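Editing resolv.conf by hand over a serial line invites typos, so the same edit can be expressed as a filter. The sketch below uses a hypothetical put_dns_first function. It reads the current file on stdin, writes the given server as the first nameserver, and comments out every previously active nameserver line. Other lines, such as search or options, pass through unchanged.

```shell
# Hypothetical helper: emit a resolv.conf whose only active nameserver
# is $1; earlier nameserver lines are kept, but commented out.
put_dns_first() {
  local dns="$1"
  printf 'nameserver %s\n' "$dns"
  # Comment out existing nameserver entries; pass everything else through.
  sed -E 's/^[[:space:]]*(nameserver[[:space:]])/# \1/'
}
```

For example, keeping a backup so you can restore the original later:

$ sudo cp /etc/resolv.conf /etc/resolv.conf.orig
$ put_dns_first 8.8.8.8 < /etc/resolv.conf.orig | sudo tee /etc/resolv.conf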

And that should allow your browser to work well enough. If you fat-finger the routes, you can renew the DHCP address on your management port (eth0) using the ifup/ifdown commands:

$ sudo ifdown eth0; sudo ip a flush eth0; sudo ifup eth0

And that should restore your management connection to what you had during boot up.

Often: No Serial Console

The problem is that everything your browser does will use your default gateway, not the station’s. Changing the default gateway drops your connection to the LANforge machine, and you lose access to it. When you are developing remotely, you can waste days waiting for a remote counterpart with hands-on access to reboot the LANforge system after you lose the network settings. Luckily, LANforge boxes come with squid and dnsmasq installed.

Starting with dnsmasq

We can start by setting up dnsmasq, so that we can direct DNS requests to the station’s DNS server.

- Update /etc/resolv.conf to point at localhost:

nameserver 127.0.0.1
# old nameserver:
# nameserver 176.168.1.1

- Update /etc/dnsmasq.conf to query the station’s DNS server:

server=8.8.8.8
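Because the default route still points at the management port, dnsmasq's queries to 8.8.8.8 would most likely follow it out eth0. dnsmasq's server= option accepts an @interface suffix that forces upstream queries through a given interface. The no-resolv option stops dnsmasq from taking upstream servers from /etc/resolv.conf, which now points at 127.0.0.1.

The sketch below uses a hypothetical dnsmasq_station_conf function to print such a config, meant to replace the plain server= line. The drop-in path in the usage line is also hypothetical. It assumes the conf-dir=/etc/dnsmasq.d line in /etc/dnsmasq.conf is enabled.

```shell
# Hypothetical helper: print a dnsmasq config that answers on localhost
# and forwards every query to the station's DNS server via the station.
#   $1 = station DNS server (e.g. 8.8.8.8)
#   $2 = station interface (e.g. wlan0)
dnsmasq_station_conf() {
  local dns="$1" sta_if="$2"
  cat <<EOF
# Only answer local clients (browser, squid, host/dig).
listen-address=127.0.0.1
bind-interfaces
# Do not read upstream servers from /etc/resolv.conf (it points at us).
no-resolv
# Send upstream queries out the station interface.
server=${dns}@${sta_if}
EOF
}
```

For example:

$ dnsmasq_station_conf 8.8.8.8 wlan0 | sudo tee /etc/dnsmasq.d/station-dns.conf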

Then start dnsmasq:

$ sudo systemctl start dnsmasq

Tail your system logs to check for typos:

$ journalctl -f

Test a DNS query:

$ host www.cnn.com 127.0.0.1
Using domain server:
Name: 127.0.0.1
Address: 127.0.0.1#53
Aliases:

www.cnn.com is an alias for turner-tls.map.fastly.net.
turner-tls.map.fastly.net has address 151.101.1.67
turner-tls.map.fastly.net has address 151.101.65.67
turner-tls.map.fastly.net has address 151.101.129.67
turner-tls.map.fastly.net has address 151.101.193.67
turner-tls.map.fastly.net has IPv6 address 2a04:4e42::323
turner-tls.map.fastly.net has IPv6 address 2a04:4e42:200::323
turner-tls.map.fastly.net has IPv6 address 2a04:4e42:400::323
turner-tls.map.fastly.net has IPv6 address 2a04:4e42:600::323

Do not enable dnsmasq with $ sudo systemctl enable dnsmasq; sudo systemctl daemon-reload. Enabling it makes dnsmasq start on every boot, and you might not remember having done it. When you are finished, remember to comment out 127.0.0.1 in /etc/resolv.conf.
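The cleanup can also be done as a filter. The sketch below uses a hypothetical restore_resolv function. It assumes resolv.conf was edited as shown earlier in this section: a 127.0.0.1 line at the top, with the old server kept as a "# nameserver ..." comment. The filter re-enables commented nameserver lines and then comments out the localhost entry.

```shell
# Hypothetical helper: undo the localhost DNS setup in a resolv.conf read
# from stdin and write the restored file to stdout.
restore_resolv() {
  # 1) un-comment "# nameserver X" lines
  # 2) comment out any "nameserver 127.0.0.1" line (runs second, so it wins)
  sed -E \
    -e 's/^#[[:space:]]*(nameserver[[:space:]])/\1/' \
    -e 's/^[[:space:]]*nameserver[[:space:]]+127\.0\.0\.1[[:space:]]*$/# &/'
}
```

Write to a temporary file first, because the shell would truncate resolv.conf before sed reads it:

$ restore_resolv < /etc/resolv.conf > /tmp/resolv.restored
$ sudo cp /tmp/resolv.restored /etc/resolv.conf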

Move on to squid

We want to set tcp_outgoing_address in /etc/squid/squid.conf to the station’s IP address, so that squid uses it as the source address of its outgoing connections:

tcp_outgoing_address 10.45.1.2
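If the station's DHCP lease changes, this line has to follow it. The sketch below makes the edit idempotent using a hypothetical set_outgoing_address function. It reads squid.conf on stdin, drops any existing tcp_outgoing_address lines, and appends one for the given address. It removes ACL-qualified tcp_outgoing_address lines too, so it assumes you have none you want to keep.

```shell
# Hypothetical helper: read squid.conf on stdin and write it back with
# exactly one tcp_outgoing_address line, for the station IP in $1.
set_outgoing_address() {
  local sta_ip="$1"
  # Drop any existing tcp_outgoing_address directives.
  grep -Ev '^[[:space:]]*tcp_outgoing_address[[:space:]]'
  printf 'tcp_outgoing_address %s\n' "$sta_ip"
}
```

For example, keeping a backup and letting squid check the result:

$ sudo cp /etc/squid/squid.conf /etc/squid/squid.conf.orig
$ set_outgoing_address 10.45.1.2 < /etc/squid/squid.conf.orig | sudo tee /etc/squid/squid.conf > /dev/null
$ sudo squid -k parse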

Start squid:

$ sudo systemctl start squid

Open a terminal and tail squid logs:

$ cd /var/log/squid; sudo tail -F *log

Next, configure Firefox

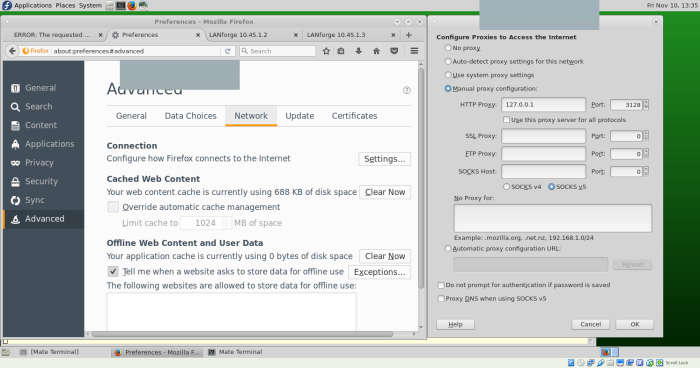

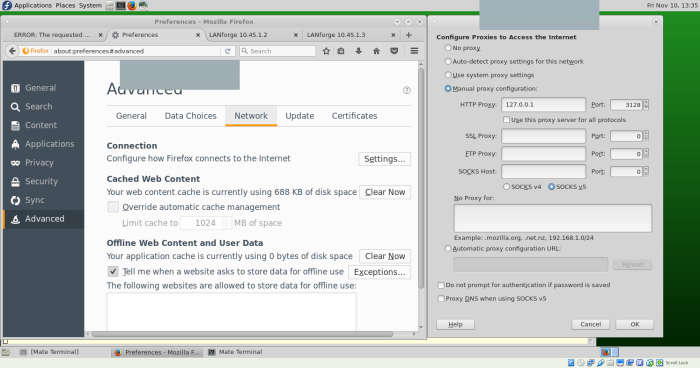

The network settings in Firefox are under Edit→Preferences, then Advanced→Network. Set the HTTP proxy to 127.0.0.1, port 3128. Clear the "No Proxy for" field, because having 127.0.0.1 and localhost in there will keep Firefox from making any connections at all.
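If you set up Firefox on several LANforge systems, the same settings can go in a user.js file in the Firefox profile directory, which Firefox reads at startup. The sketch below prints those lines using the standard network.proxy.* preference names; network.proxy.type 1 means manual proxy configuration. The function name firefox_proxy_prefs is hypothetical. Sending HTTPS through the same proxy (network.proxy.ssl) goes beyond the steps above, so those lines are left commented out.

```shell
# Hypothetical helper: print user.js lines that point Firefox's HTTP proxy
# at squid on $1:$2 and empty the "No Proxy for" list.
firefox_proxy_prefs() {
  local host="${1:-127.0.0.1}" port="${2:-3128}"
  cat <<EOF
user_pref("network.proxy.type", 1);
user_pref("network.proxy.http", "${host}");
user_pref("network.proxy.http_port", ${port});
user_pref("network.proxy.no_proxies_on", "");
// user_pref("network.proxy.ssl", "${host}");
// user_pref("network.proxy.ssl_port", ${port});
EOF
}
```

With Firefox closed, append the output to the profile's user.js, replacing PROFILE with your profile directory name:

$ firefox_proxy_prefs >> ~/.mozilla/firefox/PROFILE/user.js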

Verify with tcpdump

Now the fun begins! We want to see web traffic and DNS queries flowing out the station interface and NOT the management interface. Open two terminal windows, one for each tcpdump session. Monitor the management port:

$ sudo tcpdump -eni eth0 port 80 or port 53 or port 443

And monitor the station:

$ sudo tcpdump -eni wlan0 port 80 or port 53 or port 443

Using curl to watch for redirects

Capturing a captive portal redirect is usually a matter of sending any plain HTTP request over the station: the portal answers with a 302 (or 307) redirect.

$ source ~/lanforge.profile # sets path
$ which curl # should be /home/lanforge/local/bin/curl
$ curl -svLki -4 \
--interface wlan0 \
--localaddr 10.45.1.2 \
--dns-interface wlan0 \
--dns-ipv4-addr 10.45.1.2 \
--dns-servers 8.8.8.8 \
http://1.1.1.1/
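When scripting this check, it can be simpler to ask curl for just the status code and redirect target, using its --write-out (-w) variables %{http_code} and %{redirect_url}. Leave out -L so that curl reports the portal's first response. The sketch below classifies the status with a hypothetical is_portal_redirect function. It treats 301, 302, 303, 307 and 308 as redirects, which is broader than the 302/307 mentioned above.

```shell
# Hypothetical helper: succeed if an HTTP status code is a redirect.
is_portal_redirect() {
  case "$1" in
    301|302|303|307|308) return 0 ;;
    *) return 1 ;;
  esac
}

# Example probe (not run here): prints "<status> <redirect target>".
#   curl -s -o /dev/null -w '%{http_code} %{redirect_url}\n' \
#        --interface wlan0 http://1.1.1.1/
```

For example, in bash:

$ read -r code target < <(curl -s -o /dev/null -w '%{http_code} %{redirect_url}\n' --interface wlan0 http://1.1.1.1/)
$ is_portal_redirect "$code" && echo "portal redirect to $target"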

Use the portal-check.pl script

In the /home/lanforge/scripts directory, you might have a portal-check.pl script. It performs most of the steps outlined so far, and is useful for validating the environment and gathering data to use with portal-bot.bash.

A redirect response, as shown in curl’s verbose output, looks like this:

< Location: http://portal.example.com:8080/
< Content-Type: text/html; charset=UTF-8
< Date: Fri, 10 Nov 2017 22:05:01 GMT
< Expires: Sun, 10 Dec 2017 22:05:01 GMT
< Cache-Control: public, max-age=2592000
* Server gws is not blacklisted
< Server: gws
< Content-Length: 219
< X-XSS-Protection: 1; mode=block
< X-Frame-Options: SAMEORIGIN
<
<HTML><HEAD><meta http-equiv="content-type" content="text/html;charset=utf-8">
<TITLE>301 Moved</TITLE></HEAD><BODY>
<H1>301 Moved</H1>
The document has moved
<A HREF="http://portal.example.com:8080/">here</A>.
</BODY></HTML>
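To pull the redirect target out of output like this, for example to hand it to another tool, a small filter is enough. The sketch below uses a hypothetical location_of function. It matches the header name case-insensitively and strips the "< " prefix that curl -v adds. It also removes the trailing carriage return that HTTP header lines carry.

```shell
# Hypothetical helper: print the first Location header value found in
# curl -v or -i output read from stdin.
location_of() {
  sed -n -E 's/^(< )?[Ll][Oo][Cc][Aa][Tt][Ii][Oo][Nn]:[[:space:]]*//p' \
    | tr -d '\r' \
    | head -n 1
}
```

curl -v writes headers to stderr, so merge it into the pipe:

$ curl -svk --interface wlan0 http://1.1.1.1/ 2>&1 | location_of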

When diagnosing captive portals, keep in mind that they are often built to redirect to arbitrary high-numbered ports (8080 in the response above). Feel free to add more ports to your tcpdump filter. But at this point, curl is probably going to be your best friend.
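When you add ports by hand, it is easy to drop an "or". The sketch below uses a hypothetical port_filter helper that joins any list of port numbers into a tcpdump filter expression and rejects anything that is not a number. The 8080 in the usage line is just the port from the example redirect.

```shell
# Hypothetical helper: join port numbers into "port A or port B ...".
port_filter() {
  local expr="" p
  for p in "$@"; do
    case "$p" in
      ''|*[!0-9]*) echo "port_filter: not a port number: $p" >&2; return 1 ;;
    esac
    if [ -z "$expr" ]; then expr="port $p"; else expr="$expr or port $p"; fi
  done
  printf '%s\n' "$expr"
}
```

For example:

$ sudo tcpdump -eni wlan0 "$(port_filter 80 53 443 8080)"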

Doing any WLAN or wired network testing?
